Machine learning is now capable of writing reviews that real people find believable and useful. But the same technology is being used to fight those fake reviews. Who is likely to win the cold war for automated content, when the stakes are so high?

If you skip past the article to the comments, or past an Amazon product description to the user reviews, or check what others have said about a restaurant or hotel you’re considering, you may be one of the vast majority who are more interested in anecdotal evidence than official opinion.

It’s a controversial trend: faith in the opinion of our peers has been cited both as a factor in the spread of fake news and as a democratic antidote to unpopular policies from government and industry.

Either way, it’s big business. Some of the best-known and most profitable sites in the world have built their value on the contributions of their readers and subscribers.

Social media channels such as Facebook and Twitter, review platforms like TripAdvisor, and online marketplaces such as Amazon, eBay, and Alibaba all rely on user-contributed content to drive traffic and conversions.

Their credibility is under constant, systematic, high-volume attack. Soon, perhaps, even from artificial intelligence.

The industrialization of the fake review

The problem of self-interested and insincere reviews dates back at least to the 19th century, but it came to prominence in the internet age in the context of the video game industry and large-scale online merchants such as Amazon.

In October 2018, a study by the consumer organisation Which? revealed the industrial scale of crowdturfing (paying large numbers of people to post fake reviews and other content), highlighting dedicated Facebook groups that act as employment hubs for fake reviewers. Some of these groups have up to 87,000 members.

Earlier investigations identified gig-work portals such as Freelancer and Fiverr as major commissioning centres for fake reviews. One joint research paper from 2014 put the number of low-paid crowdturfing gigs at more than 4.3 million over a two-month period, and found that ninety percent of Fiverr’s top ten sellers were engaged in the practice.

Despite the publicity, the highest-earning organisation identified in the report continued to operate on Fiverr until March 2018, when it fell foul of a purge of crowdturfing companies.

The listings site Yelp has long known it has a problem with crowdturfed reviews, taking legal action in several major cases and dedicating significant investigative resources to the issue.

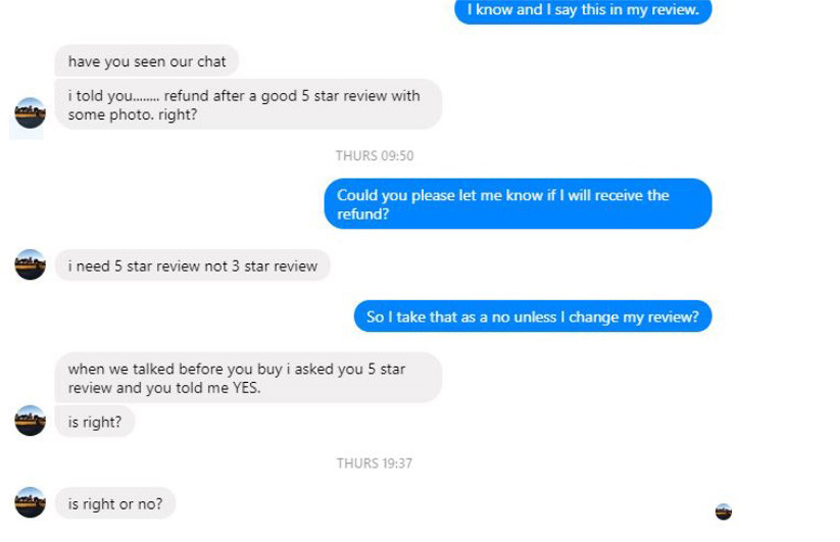

Amazon has also taken legal action against fake reviewers, and in 2016 even banned the widespread practice of “incentivized reviews”, in which shoppers trade favourable reviews for free products.

However, it transpires that the global retailer may have some moral flexibility on fake reviews, so long as it can directly benefit from them.

Artificial reviews via artificial intelligence

In 2017, a research project from the University of Chicago illustrated that an artificial intelligence trained on a dataset of actual reviews from yelp.com was able to write machine-generated reviews that readers could not distinguish from genuine human reviews in terms of general usefulness.

The AI-based reviews are produced by a model that learns from real reviews one character at a time (rather than one word at a time) and then generates text the same way, yielding entirely new strings that won’t be flagged by online plagiarism checks. One of the report’s authors believes the implications are far-reaching, characterising these capabilities as a “threat towards society at large”.
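The Chicago study used a neural network for this. As a much simpler illustration of what generating text character by character means, the sketch below swaps in a character-level Markov chain: it records which character follows each short run of characters in some sample reviews, then samples new text one character at a time. The function names, the `~` padding marker and the context length of 3 are illustrative assumptions, not details from the study.

```python
import random
from collections import defaultdict

START = "~"  # assumed padding marker that does not occur in the reviews


def train_char_model(texts, order=3):
    """Map each `order`-character context to the characters seen after it."""
    model = defaultdict(list)
    for text in texts:
        padded = START * order + text
        for i in range(len(text)):
            context = padded[i:i + order]
            model[context].append(padded[i + order])
    return model


def generate(model, order=3, length=80, seed=None):
    """Sample text one character at a time from the recorded continuations."""
    rng = random.Random(seed)
    context = START * order
    out = []
    for _ in range(length):
        choices = model.get(context)
        if not choices:  # context never seen in training: stop early
            break
        ch = rng.choice(choices)
        out.append(ch)
        context = context[1:] + ch  # slide the window forward by one character
    return "".join(out)


reviews = ["the food was great and the staff were friendly",
           "the service was slow but the food was worth it"]
model = train_char_model(reviews)
print(generate(model, seed=42))
```

With a larger corpus, a model like this can splice fragments of different reviews into sequences that appear in none of them; a neural model goes further by generalising beyond the exact contexts it has seen.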

Though the robot reviews often show quirks or awkward language, so does the source material that informs them (in this case, a dataset that Yelp makes available to data scientists).

Those who contribute to English-language forums, comments, and reviews may not be native speakers, may be semi-literate, or may just be plain lazy (possibly all three). Therefore, using well-constructed language as an authenticity test against AI-based crowdturfing seems unlikely to be effective.

Though the ability of machine learning systems to imitate human output in general remains in question, the state of the art seems good enough to get this particular job done, at least for the time being.

AI-driven reviews in the wild?

Publicly, at least, machine learning is more often used to fight fake reviews than to create them. The site fakespot.com uses machine-learning algorithms to help users judge the authenticity of reviews, and Cornell University runs a fake review resource called Review Skeptic.

Researchers are approaching the problem through ontologies, blockchain, and linguistic context, as well as signals such as low post counts, unusually high review ratings, semantic similarity between reviews, and pronoun use.
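Several of these signals are simple to compute. The sketch below, a simplified illustration rather than any particular research system, scores a review on three of them: how closely its wording matches other reviews (bag-of-words cosine similarity, a basic stand-in for semantic similarity), how often it uses first-person singular pronouns, and whether it gives the top rating. The pronoun list, function names and feature set are assumptions; a real detector would typically feed features like these into a trained classifier rather than read them directly.

```python
import math
import re
from collections import Counter

# Assumed pronoun list; real detectors use richer linguistic features.
FIRST_PERSON_SINGULAR = {"i", "me", "my", "mine", "myself"}


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def cosine_similarity(a, b):
    """Bag-of-words cosine similarity between two texts (0.0 to 1.0)."""
    ca, cb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(ca[w] * cb[w] for w in ca)
    norm_a = math.sqrt(sum(v * v for v in ca.values()))
    norm_b = math.sqrt(sum(v * v for v in cb.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def first_person_ratio(text):
    """Share of tokens that are first-person singular pronouns."""
    toks = tokenize(text)
    return sum(t in FIRST_PERSON_SINGULAR for t in toks) / len(toks) if toks else 0.0


def review_features(review, other_reviews, rating, max_rating=5):
    """Hypothetical feature set combining three signals named in the text."""
    return {
        "max_similarity": max((cosine_similarity(review, o) for o in other_reviews),
                              default=0.0),
        "first_person_ratio": first_person_ratio(review),
        "is_top_rating": rating == max_rating,
    }


print(review_features("I loved my meal", ["I loved the meal here"], rating=5))
```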

Additionally, the authors of the AI-based fake review generator mentioned above claimed in their study that the technique is as useful in identifying machine-written reviews as in creating them.

However, more recent research from Finland claims to have improved on their work, with a modified system whose reviews are more convincing and harder to spot.

The Finnish research team added a neural machine translation (NMT) layer to keep the AI focused on the core subject of the review, rather than letting unrelated keywords divert its attention.

Using a text template built from review metadata (review rating, restaurant name, city, state, and food tags), the revised technique produced reviews that fooled up to 60% of human readers.
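Treating review writing as translation can be sketched as a data-preparation step: each review's metadata is flattened into a short "source" line, and the real review becomes the "target" that a sequence-to-sequence model learns to produce from it. The field layout, separator and function names below are assumptions for illustration; the study's exact input format may differ, and no model training is shown.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewContext:
    """Metadata fields listed in the text; this class itself is hypothetical."""
    rating: int
    restaurant: str
    city: str
    state: str
    food_tags: list = field(default_factory=list)


def to_source_text(ctx):
    """Flatten metadata into one line, the 'source sentence' for an NMT-style model.

    The field=value layout and ' | ' separator are illustrative assumptions.
    """
    return " | ".join([
        f"rating={ctx.rating}",
        f"name={ctx.restaurant}",
        f"city={ctx.city}",
        f"state={ctx.state}",
        "tags=" + ",".join(ctx.food_tags),
    ])


def training_pairs(records):
    """Pair each context with its real review: (source, target) for a seq2seq model."""
    return [(to_source_text(ctx), review) for ctx, review in records]


ctx = ReviewContext(5, "Luigi's", "Austin", "TX", ["pizza", "pasta"])
print(to_source_text(ctx))
# rating=5 | name=Luigi's | city=Austin | state=TX | tags=pizza,pasta
```

Conditioning every generated review on an explicit context line like this is one plausible way to keep output on-topic: the model is asked to write about a specific restaurant, rating and cuisine rather than free-associating from whatever keywords it has just produced.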

The authors of the original Chicago paper state that they have found no evidence of their work being deployed in the real world (though it currently appears publicly forked twice on GitHub), and even a casual browse of the major gig-writing sites shows that demand for low-paid, human fake review writers is still very high.

Where does AI stand in the content-generation market?

There is little evidence that effective machine review systems are currently available to buy for black-hat marketers in the same way that zombie botnets can be purchased for click fraud, Denial of Service (DoS) attacks and email spam campaigns. Most of the more mature machine learning analysis systems aimed at detecting fraudulent user content are proprietary and jealously guarded by AI development companies.

In this particular case, the benefits of open source collaboration are likely outweighed by the need to conceal techniques from those who would exploit them.

One front in the AI war over “false” content is rewriting systems. Article spinners, which can be online services or locally installed programs, use machine learning-derived algorithms to rephrase existing content so that users can repost it for their own marketing purposes without paying royalties, being sued by content owners, or being flagged for content theft by search engines.
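Spinners of the kind described above may rely on machine learning-derived models. This sketch swaps in the crudest version of the idea, dictionary-based synonym substitution with a tiny hypothetical synonym table, mainly to show why spun text can slip past exact-match duplicate checks: the wording changes while the meaning stays the same.

```python
import re

# Hypothetical synonym table; real spinners use far larger, context-aware resources.
SYNONYMS = {"buy": "purchase", "big": "large", "fast": "quick", "cheap": "inexpensive"}


def spin(text, synonyms=SYNONYMS):
    """Replace each known word with a synonym, preserving initial capitalisation."""
    def swap(match):
        word = match.group(0)
        replacement = synonyms.get(word.lower())
        if replacement is None:
            return word
        return replacement.capitalize() if word[0].isupper() else replacement
    return re.sub(r"[A-Za-z]+", swap, text)


original = "Buy the big phone with fast charging."
spun = spin(original)
print(spun)              # Purchase the large phone with quick charging.
print(spun == original)  # False: an exact-match duplicate check would miss it
```

Naive substitution like this often produces stilted phrasing, which is one reason more capable rewriting tools turn to learned models instead.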

Content spinners operate in a legal grey area, since even the most esteemed news networks have always supplemented completely original work with rewrites of stories that other publications originally broke.

Citation of sources is a standard topic in journalistic ethics. Some wonder whether automating this kind of rewriting is a natural and legitimate evolution of the practice, and part of a trend towards AI-driven journalism.

In terms of whether or not robot rewrites can help a company’s SEO, opinion is divided.

Google sometimes seems to reward the newest content on a topic, even if it derives from original material on another domain, and even if this expressly violates Google’s own rules about duplicate content. Whether the search giant regards spun content as “duplicate content” at all seems open to debate.

Authenticity as the new online currency

The study of word patterns is a core pursuit in machine learning, and new breakthroughs seem likely to fuel new policies over the next few years.

Those whose business depends on Google search placement should be aware that the search giant is also one of the biggest AI investors on the planet. Moreover, it is actively looking to weed out imitative, low-effort content from its core search product.

History suggests that a company currently benefiting from black-hat SEO tactics such as spun content and fake reviews can eventually expect an SEO catastrophe.

Amid the continuing mania for curating content in ever more personalised ways without ever creating anything new, original, human-written content has kept its value through all the trends and purges of the last twenty years, in a way that workarounds, cheats, and hacks have never been able to match.

Therefore, founding a business model on deceptive or artificial content would seem to be a short-sighted strategy.